
What is Robots.txt in SEO?

Robots.txt is a fundamental file in SEO that helps control how search engines crawl your website. Understanding its role is crucial for optimizing website performance: it steers crawlers toward your valuable content and away from irrelevant or low-value sections. This guide dives deep into the purpose of robots.txt, how to implement it, and best practices to boost your site's SEO strategy.

When it comes to SEO (Search Engine Optimization), one of the most critical yet often overlooked elements is the robots.txt file. This small text file plays a significant role in how search engine bots, also known as web crawlers, interact with your website. While SEO is often focused on keyword optimization, content creation, and link building, understanding how to manage and control how search engines crawl your site can be a game-changer.

So, what is robots.txt in SEO, and how can it improve your site’s crawlability and overall SEO performance? In this comprehensive guide, we’ll cover everything you need to know about robots.txt, from its purpose to implementation and best practices.

1. What is Robots.txt?

The robots.txt file is a simple text file placed in the root directory of your website that provides instructions to search engine bots on which parts of your site they can or cannot crawl. Essentially, it serves as a roadmap for search engines, guiding them on how to interact with your site’s content.

For example, you may want to keep bots from crawling certain pages or sections of your site, such as admin pages, duplicate content, or test environments. By configuring a robots.txt file, you control which URLs search engines crawl. Note that crawling is not the same as indexing: a blocked URL can still appear in search results if other pages link to it.

2. Why is Robots.txt Important for SEO?

In the world of SEO, crawl budget refers to the number of pages search engines will crawl on your site during a given time frame. Optimizing this budget is crucial for large websites, where some pages may not need to be crawled or indexed. A well-configured robots.txt file can help ensure that search engines focus on the most valuable and relevant content, improving overall site efficiency and performance.

Key Benefits of Robots.txt for SEO:

- Control Crawling: Keep unwanted sections of your site from being crawled.

- Optimize Crawl Budget: Direct bots to your high-value pages.

- Reduce Duplicate Content Crawling: Keep bots from crawling duplicate or irrelevant pages.

- Keep Bots Out of Private Areas: Discourage crawling of sections like login or admin pages (this is not a security measure on its own; see Mistake 3 below).

3. How Does Robots.txt Work?

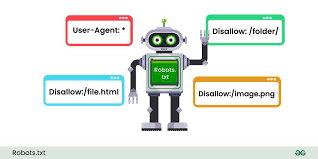

The robots.txt file works by providing instructions to search engine bots, such as Googlebot, Bingbot, or others, on how they should crawl your site. These instructions are given mainly through the User-agent and Disallow directives.

- User-agent: Specifies which search engine bot the rule applies to. You can set rules for a specific bot (e.g., Googlebot) or apply the rule to all bots by using the wildcard "*".

- Disallow: Tells the bot which pages or sections it should not crawl.

Here’s a simple example of what a robots.txt file might look like:

```text
User-agent: *
Disallow: /admin/
Disallow: /login/
```

This example tells all bots (represented by the asterisk "*") not to crawl the /admin/ and /login/ directories.
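To check how a compliant crawler reads these rules, you can use Python's standard-library `urllib.robotparser` module. The minimal sketch below parses the example above and tests a few hypothetical URLs; it also shows that a bot with its own `User-agent` group follows only that group and ignores the `*` group. Treat it as an approximation: this parser matches paths by simple prefix and applies the first matching rule, whereas Google applies the most specific (longest) matching rule and supports wildcards.

```python
from urllib.robotparser import RobotFileParser

# The example robots.txt from above, as a string.
ROBOTS_TXT = """\
User-agent: *
Disallow: /admin/
Disallow: /login/
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

# Hypothetical URLs on the site.
for url in [
    "https://example.com/admin/settings",
    "https://example.com/login/",
    "https://example.com/blog/robots-txt-guide",
]:
    allowed = parser.can_fetch("Googlebot", url)
    print(f"{url}: {'allowed' if allowed else 'blocked'}")

# A bot-specific group: Googlebot follows only its own group, not the "*" group.
SPECIFIC = """\
User-agent: Googlebot
Disallow: /drafts/

User-agent: *
Disallow: /admin/
"""

specific = RobotFileParser()
specific.parse(SPECIFIC.splitlines())
print(specific.can_fetch("Googlebot", "https://example.com/admin/"))  # True
print(specific.can_fetch("Bingbot", "https://example.com/admin/"))    # False
```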

4. How to Create a Robots.txt File

Creating a robots.txt file is simple, but it must be done correctly to avoid unintentionally blocking important pages. Follow these steps to create one for your website:

Step 1: Open a Text Editor

Use a plain text editor such as Notepad (Windows) or TextEdit (Mac, switched to plain text with Format > Make Plain Text) to create the file, and save it with UTF-8 encoding. Do not use word processing software like Microsoft Word, as it may add hidden formatting.

Step 2: Add Directives

Specify which bots should follow the rules and which directories or pages should be restricted from crawling. For example:

```text
User-agent: *
Disallow: /private/
Disallow: /temp/
```

Step 3: Save as robots.txt

Save the file as robots.txt (all lowercase) and upload it to the root directory of your website, the same directory that contains your homepage, so that it is reachable at a URL like https://example.com/robots.txt.
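Crawlers look for robots.txt only at the root of a host, and a file applies only to the protocol, host, and port it is served from, so a subdomain such as `shop.example.com` needs its own file. A minimal sketch of how a crawler might derive the governing robots.txt URL from any page URL, using only Python's standard library; the helper name `robots_txt_url` and the example URLs are hypothetical:

```python
from urllib.parse import urlsplit, urlunsplit


def robots_txt_url(page_url: str) -> str:
    """Return the robots.txt URL that governs page_url (hypothetical helper)."""
    parts = urlsplit(page_url)
    # Keep the scheme and network location (host plus any port);
    # replace the path and drop the query string and fragment.
    return urlunsplit((parts.scheme, parts.netloc, "/robots.txt", "", ""))


print(robots_txt_url("https://example.com/blog/post?id=7"))
# https://example.com/robots.txt
print(robots_txt_url("https://shop.example.com:8443/cart/"))
# https://shop.example.com:8443/robots.txt
```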

Step 4: Test the File

After uploading, use Google Search Console or other SEO tools to test your robots.txt file and confirm it works as expected.
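You can also sanity-check a draft locally before uploading it. The sketch below is a hypothetical helper built on Python's `urllib.robotparser` that lists which of your must-rank URLs a draft would block. Because this parser uses simple prefix matching, it approximates rather than reproduces how Google reads the file, so treat Search Console as the final word.

```python
from urllib.robotparser import RobotFileParser


def find_blocked(robots_txt: str, urls: list[str], agent: str = "Googlebot") -> list[str]:
    """Return the URLs in `urls` that `agent` may not crawl under `robots_txt`."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [url for url in urls if not parser.can_fetch(agent, url)]


draft = """\
User-agent: *
Disallow: /private/
Disallow: /temp/
Disallow: /products
"""  # the last rule is a deliberate mistake, added for illustration

# Hypothetical pages that must stay crawlable.
important = [
    "https://example.com/",
    "https://example.com/products/blue-widget",
    "https://example.com/blog/",
]

blocked = find_blocked(draft, important)
if blocked:
    print("Draft blocks important pages:", blocked)
```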

5. Best Practices for Robots.txt in SEO

While robots.txt is a powerful tool, it must be used carefully. Misconfiguration can lead to significant SEO issues, such as accidentally blocking your entire website from being crawled. Here are some best practices for implementing robots.txt:

1. Don’t Block Essential Pages

Never block important pages like your homepage, product pages, or blog content that you want search engines to crawl and index. Only block pages that don’t add SEO value, such as thank-you pages or backend files.

2. Block Duplicate or Thin Content

Duplicate pages and pages with little to no unique value waste crawl budget. Consider blocking pages like tag archives, internal search results, or parameterized URLs that create duplicate versions of the same content.

3. Be Careful with Wildcards

The wildcard (*), which matches any sequence of characters, and the dollar sign ($), which marks the end of a URL, can be useful in robots.txt, but they should be used with caution. A small mistake can inadvertently block important sections of your site. Test thoroughly before implementing.
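To see how these patterns behave, here is a small hypothetical matcher, assuming the semantics Google documents: `*` matches any sequence of characters, a trailing `$` anchors the end of the URL, and otherwise a rule matches any path that starts with it. Python's built-in `urllib.robotparser` does not implement these wildcards, so this is written from scratch purely for illustration; it is not a full robots.txt parser (it ignores Allow/Disallow precedence and percent-encoding).

```python
import re


def rule_matches(pattern: str, path: str) -> bool:
    """Return True if a robots.txt path pattern matches a URL path (hypothetical helper)."""
    anchored = pattern.endswith("$")
    if anchored:
        pattern = pattern[:-1]
    # Escape regex metacharacters, then turn each escaped '*' back into '.*'.
    regex = re.escape(pattern).replace(r"\*", ".*")
    if anchored:
        regex += r"\Z"  # '$' in robots.txt means "end of URL"
    # re.match anchors at the start, which gives prefix matching for unanchored rules.
    return re.match(regex, path) is not None


print(rule_matches("/*.pdf$", "/files/report.pdf"))       # True
print(rule_matches("/*.pdf$", "/files/report.pdf?v=2"))   # False
print(rule_matches("/*?sort=", "/shoes?sort=price"))      # True
print(rule_matches("/admin", "/administrator-guide"))     # True: a missing slash over-blocks
print(rule_matches("/admin/", "/administrator-guide"))    # False
```

The last two lines show the kind of small mistake the section warns about: dropping the trailing slash turns a rule for one directory into a rule for every path that begins with the same letters.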

4. Regularly Review and Update

As your site grows or changes, your robots.txt file should be updated accordingly. Set a reminder to review it regularly to ensure it's still optimized for your current site structure.

6. Common Mistakes to Avoid with Robots.txt

Mistake 1: Blocking All Crawling

Some website owners mistakenly block all bots from crawling their entire site by using:

```text
User-agent: *
Disallow: /
```

This tells every compliant bot not to crawl any page on your site, so search engines can no longer read your content, which can result in a disastrous drop in SEO rankings.
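A related pitfall is confusing `Disallow: /`, which blocks every URL, with an empty `Disallow:` line, which blocks nothing. A quick check with Python's standard `urllib.robotparser`; the helper name `can_crawl` and the page URL are hypothetical:

```python
from urllib.robotparser import RobotFileParser


def can_crawl(robots_txt: str, url: str, agent: str = "Googlebot") -> bool:
    """Check whether `agent` may crawl `url` under the given robots.txt text."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(agent, url)


page = "https://example.com/products/blue-widget"

block_all = "User-agent: *\nDisallow: /\n"
allow_all = "User-agent: *\nDisallow:\n"

print(can_crawl(block_all, page))  # False: every path starts with "/"
print(can_crawl(allow_all, page))  # True: an empty Disallow value blocks nothing
```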

Mistake 2: Blocking CSS or JavaScript

In the past, it was common to block CSS and JavaScript files from being crawled. However, search engines like Google now use these resources to understand how a page looks and functions. Blocking them can hurt your rankings.

Mistake 3: Relying Solely on Robots.txt for Security

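The robots.txt file is not a security measure. Only well-behaved bots follow it, and the file itself is publicly readable, so listing a private path there can even draw attention to it. If you need to protect sensitive data, such as login pages or personal files, use proper authentication methods rather than relying on robots.txt to keep bots out.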

7. How Robots.txt Interacts with Meta Tags

Besides robots.txt, you can use meta tags to control how individual pages are crawled and indexed. The meta robots tag allows you to set directives like noindex, nofollow, and noarchive at the page level, providing more granular control than robots.txt.

Here’s an example of a meta robots tag placed in the HTML of a page:

```html
<meta name="robots" content="noindex, nofollow">
```

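This tells search engines not to index the page and not to follow any links on it. Keep in mind how the two mechanisms interact: crawlers can only see this tag on pages they are allowed to crawl. If robots.txt blocks the page, the noindex directive is never read, and the URL may still be indexed if other pages link to it.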
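Because a crawler consults robots.txt before fetching a page, it can only read the meta robots tag on pages it is allowed to crawl. The minimal sketch below models that order of operations with Python's standard library (`urllib.robotparser` and `html.parser`). The function and class names, URLs, and HTML are hypothetical, and real crawlers do much more than this.

```python
from html.parser import HTMLParser
from urllib.robotparser import RobotFileParser


class MetaRobotsParser(HTMLParser):
    """Collect directives from <meta name="robots" content="..."> tags."""

    def __init__(self):
        super().__init__()
        self.directives = set()

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "meta" and (attrs.get("name") or "").lower() == "robots":
            for value in (attrs.get("content") or "").split(","):
                if value.strip():
                    self.directives.add(value.strip().lower())


def visible_directives(robots_txt, url, html, agent="Googlebot"):
    """Return the meta robots directives a compliant crawler would see, or None if blocked."""
    rules = RobotFileParser()
    rules.parse(robots_txt.splitlines())
    if not rules.can_fetch(agent, url):
        return None  # The page is never fetched, so its meta tag is never read.
    meta = MetaRobotsParser()
    meta.feed(html)
    return meta.directives


page_html = '<html><head><meta name="robots" content="noindex, nofollow"></head></html>'
robots = "User-agent: *\nDisallow: /private/\n"

print(visible_directives(robots, "https://example.com/private/report", page_html))  # None
print(sorted(visible_directives(robots, "https://example.com/thank-you", page_html)))  # ['nofollow', 'noindex']
```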

8. Robots.txt vs. Sitemap: What’s the Difference?

While the robots.txt file controls what search engines should avoid, a sitemap does the opposite. A sitemap.xml file is a list of all the pages you want search engines to crawl and index. Combining both files allows you to guide bots more effectively — blocking unnecessary pages while emphasizing important ones.

For example, your robots.txt file may block admin pages, while your sitemap highlights your blog posts and product pages for crawling.
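The two files can also be linked: the sitemaps.org protocol lets you list a sitemap's full URL on a `Sitemap:` line in robots.txt, and major search engines such as Google read it. Python's `urllib.robotparser` exposes these lines through `site_maps()` (Python 3.8+). A minimal sketch, assuming hypothetical paths and a sitemap at `https://example.com/sitemap.xml`:

```python
from urllib.robotparser import RobotFileParser

# Block admin pages while pointing crawlers at the sitemap.
ROBOTS_TXT = """\
User-agent: *
Disallow: /admin/

Sitemap: https://example.com/sitemap.xml
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

print(parser.site_maps())  # ['https://example.com/sitemap.xml']
print(parser.can_fetch("Googlebot", "https://example.com/admin/users"))           # False
print(parser.can_fetch("Googlebot", "https://example.com/blog/robots-txt-guide"))  # True
```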

9. Testing Your Robots.txt with SEO Tools

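Using tools like Google Search Console, you can check that your robots.txt file is functioning as expected. Its robots.txt report (under Settings) shows which robots.txt files Google found for your site, when they were last crawled, and any warnings or errors. This report replaced the older robots.txt Tester that used to sit under Crawl > robots.txt Tester.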

Additionally, platforms like Screaming Frog and Ahrefs offer SEO auditing tools to assess whether your robots.txt file is impacting your site’s performance.

10. When Should You Use Robots.txt?

Not every website needs an elaborate robots.txt configuration. However, if your site contains sections that search engines don’t need to crawl, such as low-value pages, duplicate content, or private areas (which still need proper access controls), you should implement a well-thought-out robots.txt file.

Some common scenarios for using robots.txt include:

- E-commerce sites: To block search engines from crawling checkout pages.

- Development sites: To keep bots from crawling incomplete pages (password protection is the more reliable way to keep them out of search results).

- Large sites: To manage crawl budget effectively.

Conclusion

The robots.txt file is a crucial element in SEO that allows you to control how search engines crawl your website. While it may seem simple, its impact on your site’s SEO strategy can be profound. Properly configuring this file can help you make better use of your crawl budget, keep bots away from duplicate content, and reduce crawling of private sections of your site.
