SEO Robots.txt: Optimize Your Website Crawling with a Free Tool

Learn how to create an SEO-friendly robots.txt file to manage search engine crawling and indexing for your website. Use the free Robots.txt Builder Tool from WebTigersAI to create, test, and optimize your robots.txt file effortlessly.

What is Robots.txt and Why is It Important for SEO?

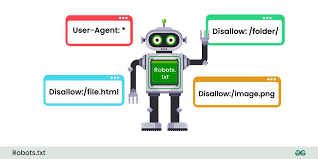

The robots.txt file is a key element of any SEO strategy. This plain text file, placed in your website’s root directory, tells search engine crawlers which pages or directories they may or may not crawl. By managing what crawlers can access, you can keep them away from low-value pages and direct their attention to the content you want to appear in search results. Keep in mind that robots.txt governs crawling, not indexing: a blocked URL can still show up in search results if other pages link to it.

For example, you can block certain directories like admin panels, duplicate content, or irrelevant pages to boost your site's overall SEO performance.
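Because crawlers look for the file only at the root of a host, it can help to compute where the robots.txt for a given page must live. The following is a minimal sketch; the function name `robots_txt_url` and the example.com URLs are illustrative assumptions, not part of any tool mentioned here.

```python
from urllib.parse import urlsplit, urlunsplit


def robots_txt_url(page_url: str) -> str:
    """Return the robots.txt location for the host that serves page_url."""
    parts = urlsplit(page_url)
    # Keep the scheme and host (including any port), replace the path
    # with /robots.txt, and drop the query string and fragment.
    return urlunsplit((parts.scheme, parts.netloc, "/robots.txt", "", ""))


if __name__ == "__main__":
    print(robots_txt_url("https://www.example.com/blog/post-1?ref=home"))
```

Note that each host has its own file, so a subdomain such as shop.example.com would need a separate robots.txt at its own root.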

How Does Robots.txt Affect SEO?

A well-structured robots.txt file can positively impact your SEO by:

- Improving Crawl Efficiency: By disallowing unimportant pages, you allow search engines to focus their resources on crawling your important pages.

- Avoiding Duplicate Content: Blocking duplicate or redundant pages keeps crawlers from spending time on multiple versions of the same content.

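- Controlling Crawler Access: You can keep crawlers out of private or temporary sections. To make sure a page stays out of search results entirely, pair this with a noindex directive or access control, since robots.txt alone does not prevent indexing.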

However, an incorrect robots.txt file can stop search engines from crawling critical pages, which can hurt your SEO. That’s why it’s essential to plan the rules carefully when creating this file.

Best Practices for Creating an SEO-Friendly Robots.txt File

- Allow Search Engines to Crawl Important Pages

Make sure that key pages like your homepage, blog posts, and category pages are crawlable. For example:

```txt
User-agent: *
Disallow:
```

An empty Disallow value blocks nothing, so this allows all crawlers to crawl every page of your site.

- Block Unnecessary or Duplicate Pages

Disallow pages that don’t add value to search engines, such as login pages or duplicate content. For example:

```txt
User-agent: *
Disallow: /admin/
Disallow: /login/
Disallow: /search/
```

- Block Crawling of Sensitive Data

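If your site has private directories that crawlers should stay out of, such as payment systems or private areas, disallow them. Because robots.txt is publicly readable and compliance is voluntary, protect genuinely sensitive data with authentication as well:

```txt
User-agent: *
Disallow: /private/
Disallow: /payment/
```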

- Allow Crawling of Important Sections Within Disallowed Directories

In some cases, you may want to allow certain files or pages in a directory that is otherwise blocked. For example:

```txt
User-agent: *
Disallow: /images/
Allow: /images/logo.jpg
```
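When an Allow and a Disallow rule both match a URL, the Robots Exclusion Protocol (RFC 9309), which Google follows, applies the most specific rule, meaning the one with the longest matching path, and prefers Allow on a tie. The sketch below illustrates that precedence for plain path prefixes only. The function name `is_allowed` is an assumption made for this example, and wildcards (`*`, `$`) are deliberately not handled.

```python
from typing import Sequence, Tuple


def is_allowed(path: str, rules: Sequence[Tuple[str, str]]) -> bool:
    """Apply longest-match precedence to ("allow" | "disallow", prefix) rules.

    Simplified sketch: plain prefix matching only, no wildcard support.
    """
    best_len = -1
    allowed = True  # a path that matches no rule may be crawled
    for kind, prefix in rules:
        if not prefix:
            continue  # an empty value matches nothing
        if path.startswith(prefix):
            longer = len(prefix) > best_len
            tie_prefers_allow = len(prefix) == best_len and kind == "allow"
            if longer or tie_prefers_allow:
                best_len = len(prefix)
                allowed = kind == "allow"
    return allowed


if __name__ == "__main__":
    rules = [("disallow", "/images/"), ("allow", "/images/logo.jpg")]
    for path in ("/images/logo.jpg", "/images/banner.png", "/about/"):
        print(path, is_allowed(path, rules))
```

Under these rules the logo is crawlable because its Allow path is longer than the Disallow prefix, while every other file under /images/ stays blocked.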

- Use a Robots.txt Tester

Once you’ve created your robots.txt file, it’s essential to test it using tools like the robots.txt report in Google Search Console, which replaced the older "Robots.txt Tester." This helps confirm that your rules block and allow the URLs you expect.
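You can also run a quick local check before uploading. The sketch below uses Python's standard `urllib.robotparser` module to compare a rule set against a list of expectations. The helper `check_rules`, the `ExampleBot` user agent, and the example.com URLs are assumptions made for illustration. Python's parser applies rules in file order, so for overlapping Allow and Disallow rules its answers can differ from a search engine's; treat it as a sanity check rather than a replacement for the search engine's own tools.

```python
from urllib.robotparser import RobotFileParser

ROBOTS_TXT = """\
User-agent: *
Disallow: /admin/
Disallow: /login/
Disallow: /search/
"""


def check_rules(robots_txt: str, expectations: dict, agent: str = "ExampleBot") -> list:
    """Return the URLs whose crawlability differs from what was expected."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    failures = []
    for url, expected in expectations.items():
        if parser.can_fetch(agent, url) != expected:
            failures.append(url)
    return failures


if __name__ == "__main__":
    expected = {
        "https://www.example.com/": True,
        "https://www.example.com/blog/post-1": True,
        "https://www.example.com/admin/settings": False,
        "https://www.example.com/search/?q=shoes": False,
    }
    failures = check_rules(ROBOTS_TXT, expected)
    print("all rules behave as expected" if not failures else failures)
```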

Create Your SEO-Friendly Robots.txt with the Free Tool

Manually creating a robots.txt file can be tricky, especially if you’re unsure of the syntax. That’s why the Online Robots.txt Builder Tool from WebTigersAI is a perfect solution. It’s a free and easy-to-use tool designed to help you create a fully optimized robots.txt file in just a few steps.

With the WebTigersAI tool, you can:

- Quickly generate a customized robots.txt file

- Add, disallow, or allow specific pages and directories

- Optimize your file for SEO

- Test your file to ensure it works properly

Using this tool, you’ll save time and avoid common mistakes that could affect your SEO performance.

How to Use the Free Robots.txt Builder Tool

- Visit the Tool: Go to the Online Robots.txt Builder Tool.

- Choose Your Preferences: Select whether you want to block or allow specific pages or directories.

- Generate the File: The tool will automatically create the robots.txt file for you.

- Upload and Test: After generating the file, upload it to your website's root directory and use the robots.txt report in Google Search Console (the successor to the "Robots.txt Tester") to confirm that it is fetched and parsed correctly.
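To see what the generation step produces, here is a minimal sketch of building the file contents from lists of paths. It is not the WebTigersAI tool's implementation; the function `build_robots_txt` and its parameters are hypothetical and exist only to show the shape of the output.

```python
def build_robots_txt(disallow=(), allow=(), user_agent="*") -> str:
    """Build robots.txt text for a single user-agent group."""
    for path in list(disallow) + list(allow):
        if not path.startswith("/"):
            raise ValueError(f"rule paths must start with '/': {path!r}")
    lines = [f"User-agent: {user_agent}"]
    lines += [f"Allow: {path}" for path in allow]
    lines += [f"Disallow: {path}" for path in disallow]
    if not disallow and not allow:
        # An empty Disallow value means nothing is blocked.
        lines.append("Disallow:")
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    print(build_robots_txt(disallow=["/images/"], allow=["/images/logo.jpg"]), end="")
```

Save the returned text as a file named robots.txt and upload it to the root directory of your site.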

Conclusion

An optimized robots.txt file is an important part of any SEO strategy, helping you control how search engines crawl your site. By following best practices and using the free Robots.txt Builder Tool from WebTigersAI, you can easily create an SEO-friendly file that keeps crawlers focused on the pages you want in search results.
