How to Use Robots.txt to Disallow All: A Complete Guide

Learn how to use the robots.txt file to stop all search engine crawlers from crawling your website. This guide covers the steps, best practices, and reasons you might want to block all crawlers.

What is Robots.txt?

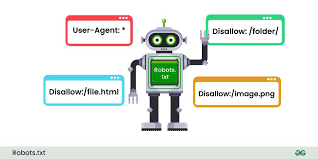

The robots.txt file is a simple text file that resides in the root directory of your website. It acts as a guide for search engine crawlers, telling them which pages they can and cannot access on your website. This file is crucial for controlling how search engines interact with your site.

Why Use Robots.txt to Disallow All?

In some cases, you might want to prevent all search engines from crawling your website. This could be useful for:

- Websites under development

- Private websites

- Staging environments

- Testing new features

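A blanket disallow asks compliant crawlers not to fetch any page on your site, which in most cases keeps its content out of their indexes. Note that robots.txt is advisory rather than an access control: a blocked URL can still show up in search results (without a description) if other sites link to it, and anyone who has the URL can still open the page. For content that must stay private, rely on authentication as well.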

How to Disallow All Search Engine Crawlers

To block all search engines from crawling your entire website, add the following two lines to your robots.txt file:

    User-agent: *
    Disallow: /

Here's what each part means:

- User-agent: * – The asterisk (*) is a wildcard that applies to all crawlers.

- Disallow: / – This blocks access to the entire website by preventing crawlers from visiting any URL under your domain.
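To see how a crawler might interpret these two lines, here is a minimal sketch using Python's standard `urllib.robotparser` module. The helper name `is_allowed` and the `example.com` URLs are placeholders chosen for illustration, and real crawlers may differ from this parser in edge cases.

```python
from urllib.robotparser import RobotFileParser

# The two-line "disallow all" rule set from this guide.
DISALLOW_ALL = """\
User-agent: *
Disallow: /
"""


def is_allowed(robots_txt: str, user_agent: str, url: str) -> bool:
    """Return True if robots_txt lets user_agent fetch url (hypothetical helper)."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


# Every path is blocked for every crawler name.
print(is_allowed(DISALLOW_ALL, "Googlebot", "https://www.example.com/"))          # False
print(is_allowed(DISALLOW_ALL, "Bingbot", "https://www.example.com/blog/post"))  # False

# For contrast: an empty Disallow value blocks nothing.
ALLOW_ALL = "User-agent: *\nDisallow:\n"
print(is_allowed(ALLOW_ALL, "Googlebot", "https://www.example.com/"))  # True
```

The contrast case matters in practice: `Disallow: /` and `Disallow:` differ by a single character but have opposite effects.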

How to Create a Robots.txt File

- Open a text editor: Use a simple text editor like Notepad (Windows) or TextEdit (Mac). In TextEdit, switch the document to plain text first (Format > Make Plain Text).

- Add the disallow code: Paste the code mentioned above.

- Save the file as robots.txt: Make sure the file is saved as plain text.

- Upload the file: Place the robots.txt file in the root directory of your website so that it is reachable at www.yourdomain.com/robots.txt. Crawlers only look for the file at that location, not in subdirectories.
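If you would rather generate the file from a script than a text editor, the steps above can be sketched in Python as follows. The function name `write_disallow_all` and the target directory are assumptions for illustration; uploading the result to your server is still a separate step that depends on your hosting setup.

```python
from pathlib import Path

RULES = "User-agent: *\nDisallow: /\n"


def write_disallow_all(directory: str) -> Path:
    """Write a plain-text robots.txt into directory and return its path (hypothetical helper)."""
    target = Path(directory) / "robots.txt"
    # Plain UTF-8 text with Unix line endings; no rich-text formatting involved.
    with open(target, "w", encoding="utf-8", newline="\n") as handle:
        handle.write(RULES)
    return target
```

Calling `write_disallow_all("public")` would, assuming a local `public` folder that mirrors your site root, create `public/robots.txt` ready for upload.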

Testing Your Robots.txt File

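After uploading the robots.txt file, test that it works as intended. First, open www.yourdomain.com/robots.txt in a browser to confirm that the file is served from the root of your site. Tools such as the robots.txt report in Google Search Console (which replaced the older robots.txt Tester) can then confirm that the file was fetched and parsed without errors.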
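A scripted check can complement those tools. The sketch below downloads the live file and confirms that a few sample paths are blocked for a few crawler names. It is only an approximation of what a real crawler does, and the function names, crawler names, sample paths, and staging URL are all assumptions picked for illustration.

```python
from urllib.parse import urljoin
from urllib.request import urlopen
from urllib.robotparser import RobotFileParser


def fetch_text(url: str) -> str:
    """Download url and return its body as text (raises if the file is missing)."""
    with urlopen(url, timeout=10) as response:
        return response.read().decode("utf-8", errors="replace")


def site_blocks_all(
    base_url: str,
    agents=("Googlebot", "Bingbot"),            # sample crawler names
    paths=("/", "/index.html", "/blog/post"),   # sample paths, adjust to your site
    fetch=fetch_text,                           # injectable so the check can run offline
) -> bool:
    """Return True if every sample path is disallowed for every sample agent."""
    parser = RobotFileParser()
    parser.parse(fetch(urljoin(base_url, "/robots.txt")).splitlines())
    return not any(
        parser.can_fetch(agent, urljoin(base_url, path))
        for agent in agents
        for path in paths
    )


# Example call against a hypothetical staging host:
# site_blocks_all("https://staging.example.com")
```

The `fetch` parameter is a design choice for testability: passing a function that returns a fixed string lets you exercise the check without any network access.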

When Should You NOT Use Robots.txt to Disallow All?

Blocking all crawlers can have significant SEO implications. If your goal is to rank your website, avoid using a blanket disallow directive. It is only recommended for websites that you never want crawled by search engines. Used incorrectly, it can harm your visibility and traffic: for example, a disallow-all file copied from a staging environment to the live site will ask search engines to stop crawling every page.

Conclusion

Using the robots.txt file to disallow all crawlers is a simple way to keep compliant search engines from crawling your website. However, use it cautiously, especially if you want your site to appear in search engine results, and do not treat it as a security measure. Always make sure you understand the implications of blocking crawlers before adding this directive.
